For decades, developers argued about whether a programming language was compiled or interpreted. Today, that question doesn’t even make sense anymore.

We’ve entered the age of language runtimes, a world where your code might be compiled, interpreted, JIT-compiled, optimized at runtime, cached, sandboxed, or even executed inside another runtime (inside another runtime).

If you read my earlier post, “Beyond Compiled vs Interpreted,” this one picks up right where that one left off. There, we looked at why the old definitions no longer fit.

Now, let’s explore what replaced them: the powerful world of language runtimes.

1. The Classical Divide: Compilers vs Interpreters

In the early decades of computing, things were simpler. You wrote code in a high-level language, passed it through a compiler, and the result was a machine-language executable you could run directly. This was the world of Fortran, C, and Pascal (with assembly language one step below them), where every line you wrote had a clear connection to binary instructions.

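On the other side were interpreted languages. These did not translate code into machine instructions ahead of time. Instead, an interpreter read your source code statement by statement, parsing and executing it while the program ran. Classic BASIC interpreters worked this way, as did early Lisp systems. Perl and Python were also labeled interpreted, although even their first versions translated source into an internal form (an op tree or bytecode) before executing it. The approach was more flexible but slower, because translation happened during execution.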
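The classic interpreter loop is small enough to sketch. The toy below interprets a hypothetical BASIC-like mini-language. The `LET`, `PRINT`, and `IF`/`GOTO` statements and the `run`, `evaluate`, and `value` functions are invented for illustration, and the code needs Python 3.9+. Each line is split and parsed again every time it executes, including on every pass through the loop. That repeated parsing is the translation cost described above.

```python
def value(token: str, env: dict[str, int]) -> int:
    """A token is either an integer literal or a variable name."""
    return int(token) if token.lstrip("-").isdigit() else env[token]


def evaluate(expr: str, env: dict[str, int]) -> int:
    """Evaluate space-separated expressions such as 'total + n - 1'."""
    tokens = expr.split()
    result = value(tokens[0], env)
    for op, token in zip(tokens[1::2], tokens[2::2]):
        if op == "+":
            result += value(token, env)
        elif op == "-":
            result -= value(token, env)
        else:
            raise SyntaxError(f"unknown operator: {op}")
    return result


def run(source: str) -> list[str]:
    """Interpret a tiny BASIC-like program, parsing each line as it executes."""
    lines = source.strip().splitlines()
    env: dict[str, int] = {}
    output: list[str] = []
    pc = 0  # index of the line currently being executed
    while pc < len(lines):
        # Parsing happens here, every time the line runs, not ahead of time.
        keyword, _, rest = lines[pc].strip().partition(" ")
        pc += 1
        if keyword == "LET":
            name, _, expr = rest.partition("=")
            env[name.strip()] = evaluate(expr, env)
        elif keyword == "PRINT":
            output.append(str(evaluate(rest, env)))
        elif keyword == "IF":
            # IF <expr> GOTO <line>: jump when the expression is non-zero.
            cond, _, target = rest.partition(" GOTO ")
            if evaluate(cond, env) != 0:
                pc = int(target) - 1  # program lines are numbered from 1
        else:
            raise SyntaxError(f"unknown statement: {keyword}")
    return output


program = """
LET n = 3
LET total = 0
LET total = total + n
LET n = n - 1
IF n GOTO 3
PRINT total
"""
print(run(program))  # ['6']
```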

This clear distinction made sense when computing power was limited. Compilers were heavy and slow to run, while interpreters were convenient but inefficient. Every cycle of CPU time mattered. If you wanted performance, you used a compiled language. If you wanted flexibility, you used an interpreted one.

As systems became faster and memory cheaper, developers wanted both speed and flexibility. That desire blurred the line between the two categories.

2. Then Came the Runtime Revolution

A runtime environment changed everything. Instead of viewing code as something that had to be fully translated before running, runtimes created a managed layer where programs could be executed dynamically, optimized, and even recompiled while they ran.

A runtime is not just an interpreter. It is an environment that handles tasks such as memory management, garbage collection, type checking, exception handling, JIT compilation, and dynamic optimization.
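Garbage collection is a good example of work that moves from the programmer to the runtime. Here is a minimal mark-and-sweep sketch. It assumes a hypothetical `Heap` that tracks every allocated object, plus a list of roots standing in for globals and stack variables. Production collectors are generational, incremental, or concurrent, and far more involved. This sketch only shows the core rule: anything unreachable from the roots can be reclaimed, cycles included.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # identity-based equality, like real heap objects
class Obj:
    name: str
    refs: list["Obj"] = field(default_factory=list)  # outgoing pointers
    marked: bool = False


class Heap:
    """A toy managed heap: the program allocates, the runtime reclaims."""

    def __init__(self) -> None:
        self.objects: list[Obj] = []
        self.roots: list[Obj] = []  # stand-ins for globals and stack slots

    def alloc(self, name: str) -> Obj:
        obj = Obj(name)
        self.objects.append(obj)
        return obj

    def collect(self) -> list[str]:
        """Mark everything reachable from the roots, then sweep the rest."""
        stack = list(self.roots)
        while stack:  # mark phase: iterative depth-first traversal
            obj = stack.pop()
            if not obj.marked:
                obj.marked = True
                stack.extend(obj.refs)
        freed = [o.name for o in self.objects if not o.marked]
        self.objects = [o for o in self.objects if o.marked]  # sweep phase
        for o in self.objects:
            o.marked = False  # reset marks for the next collection
        return freed


heap = Heap()
a, b, c = heap.alloc("a"), heap.alloc("b"), heap.alloc("c")
a.refs.append(b)  # a -> b
c.refs.append(c)  # c points only to itself: a cycle with no root
heap.roots.append(a)
print(heap.collect())  # ['c']
```

The self-referencing object `c` is reclaimed even though its reference count would never drop to zero. This is why tracing collectors handle cycles that naive reference counting misses.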

This marked the beginning of managed execution, where the environment itself takes care of performance, safety, and cross-platform portability.

Languages were no longer simply compiled or interpreted. They became runtime-managed, meaning the environment continuously analyzed, compiled, and optimized code as needed.

That philosophy defined the modern era of programming, and it reached the mainstream through one of the most influential technologies ever built: the Java Virtual Machine.

3. Java and the Birth of Bytecode

When Java launched in 1995, it came with a bold promise: “Write Once, Run Anywhere.”

That promise was powered by a new idea called bytecode. Instead of compiling Java directly into platform-specific machine code, the Java compiler transformed source code into .class files containing portable bytecode instructions.

These bytecodes were not raw CPU instructions. They formed an intermediate language executed by the Java Virtual Machine (JVM). Depending on the JVM implementation and the workload, the JVM either interpreted the bytecode or JIT-compiled it into native machine code.
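The split between compiling to bytecode and executing it can be sketched in a few lines. This sketch assumes a made-up stack-based instruction set (`PUSH`, `LOAD`, `ADD`, `SUB`, `MUL`). It is only loosely in the spirit of JVM instructions such as `iload` and `iadd` and is not real Java bytecode. `compile_expr` plays the role of the Java compiler, and `execute` plays the role of the virtual machine.

```python
import ast

# Map Python's AST operator classes onto our invented opcodes.
OPS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL"}


def compile_expr(source: str) -> list[tuple]:
    """Front end: translate an arithmetic expression into stack bytecode."""
    code: list[tuple] = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.Constant):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.Name):
            code.append(("LOAD", node.id))
        elif isinstance(node, ast.BinOp) and type(node.op) in OPS:
            emit(node.left)  # operands are pushed first...
            emit(node.right)
            code.append((OPS[type(node.op)],))  # ...then the operator pops them
        else:
            raise SyntaxError(f"unsupported syntax: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def execute(code: list[tuple], env: dict[str, int]) -> int:
    """Back end: a tiny stack-based virtual machine."""
    stack: list[int] = []
    for op, *args in code:
        if op == "PUSH":
            stack.append(args[0])
        elif op == "LOAD":
            stack.append(env[args[0]])
        else:
            right, left = stack.pop(), stack.pop()
            if op == "ADD":
                stack.append(left + right)
            elif op == "SUB":
                stack.append(left - right)
            elif op == "MUL":
                stack.append(left * right)
    return stack.pop()


bytecode = compile_expr("(x + 2) * y")
print(bytecode)  # [('LOAD', 'x'), ('PUSH', 2), ('ADD',), ('LOAD', 'y'), ('MUL',)]
print(execute(bytecode, {"x": 3, "y": 4}))  # 20
```

Only `execute` depends on the machine it runs on. The bytecode list itself is the portable artifact, which is the essence of the “Write Once, Run Anywhere” design.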

This changed everything. Developers could write a program on Windows, move it to Linux or macOS, and it would run without modification. The JVM handled the rest.

Beyond portability, the JVM introduced performance intelligence. Later JVM implementations, most notably Sun’s HotSpot, added adaptive optimization: the runtime monitors which code runs most frequently and selectively compiles those sections into highly optimized native code.
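Adaptive optimization comes down to counting executions and switching tiers once code is hot. The sketch below assumes a hypothetical `TieredFunction` wrapper and an arbitrary threshold of three calls. The baseline tier walks the syntax tree on every call. The “optimized” tier calls Python’s built-in `compile()` once and reuses the resulting code object. That code object stands in for the native code a JIT such as HotSpot would generate.

```python
import ast

HOT_THRESHOLD = 3  # arbitrary; real VMs use much larger, tunable thresholds


class TieredFunction:
    """Run an expression in a slow tier until it is hot, then switch tiers."""

    def __init__(self, source: str) -> None:
        self.tree = ast.parse(source, mode="eval")
        self.calls = 0
        self.compiled = None  # filled in once the function becomes hot

    def __call__(self, **env: int) -> int:
        self.calls += 1
        if self.compiled is None and self.calls > HOT_THRESHOLD:
            # "JIT" step: translate once, then reuse on every later call.
            self.compiled = compile(self.tree, "<hot>", "eval")
        if self.compiled is not None:
            return eval(self.compiled, {"__builtins__": {}}, env)
        return self.interpret(self.tree.body, env)

    def interpret(self, node: ast.AST, env: dict) -> int:
        """Baseline tier: walk the syntax tree on every call."""
        if isinstance(node, ast.Constant):
            return node.value
        if isinstance(node, ast.Name):
            return env[node.id]
        if isinstance(node, ast.BinOp):
            left = self.interpret(node.left, env)
            right = self.interpret(node.right, env)
            if isinstance(node.op, ast.Add):
                return left + right
            if isinstance(node.op, ast.Mult):
                return left * right
        raise SyntaxError("unsupported expression")

    @property
    def tier(self) -> str:
        return "optimized" if self.compiled is not None else "interpreted"


square_plus_one = TieredFunction("x * x + 1")
for i in range(5):
    print(i, square_plus_one(x=i), square_plus_one.tier)
```

The first three calls walk the tree, and every call after that reuses the compiled code object. Production JVMs apply the same principle with several tiers and far larger thresholds.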

Java made it possible to start slow and finish fast. Developers could ship portable code, and the runtime itself would improve its performance as it ran. That idea of combining portability with optimization became the foundation of many modern languages.

4. .NET and Managed Execution

Microsoft’s .NET Framework took the concept of the JVM and expanded it into a cross-language platform. Introduced in the early 2000s, .NET’s Common Language Runtime (CLR) could execute code written in multiple programming languages, such as C#, VB.NET, and later F#. All of them compiled into a single intermediate form called MSIL (Microsoft Intermediate Language), since standardized as CIL (Common Intermediate Language).

The CLR’s job was to JIT-compile that MSIL into machine code at runtime. It also provided garbage collection, exception handling, metadata for reflection, and cross-language type safety.

Developers could mix and match languages within the same project. A class written in C# could interact seamlessly with one written in VB.NET. Everything worked within the same managed environment.
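The cross-language design rests on every compiler targeting the same intermediate form. This minimal sketch assumes two hypothetical toy front ends: one for an infix syntax and one for a Lisp-style prefix syntax. Both emit the same made-up stack instructions for one shared executor. None of this is real MSIL/CIL. It only shows why code from different source languages can meet inside one runtime.

```python
import re


def tokenize(src: str) -> list[str]:
    return re.findall(r"\d+|[()+*]", src)


def compile_infix(src: str) -> list[tuple]:
    """Front end A: '2 + 3 * 4', with * binding tighter than +."""
    tokens, pos = tokenize(src), 0

    def term() -> list[tuple]:
        nonlocal pos
        out = [("PUSH", int(tokens[pos]))]
        pos += 1
        while pos < len(tokens) and tokens[pos] == "*":
            out += [("PUSH", int(tokens[pos + 1])), ("MUL",)]
            pos += 2
        return out

    code = term()
    while pos < len(tokens) and tokens[pos] == "+":
        pos += 1
        code += term() + [("ADD",)]
    return code


def compile_prefix(src: str) -> list[tuple]:
    """Front end B: Lisp-style '(+ 2 (* 3 4))' with exactly two operands."""
    tokens, pos = tokenize(src), 0

    def expr() -> list[tuple]:
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok != "(":
            return [("PUSH", int(tok))]
        op = tokens[pos]
        pos += 1
        out = expr() + expr()  # left operand, then right operand
        pos += 1  # skip the closing ")"
        return out + [("ADD",) if op == "+" else ("MUL",)]

    return expr()


def run(code: list[tuple]) -> int:
    """The shared 'runtime': it never sees which front end produced the code."""
    stack: list[int] = []
    for op, *args in code:
        if op == "PUSH":
            stack.append(args[0])
        else:
            b, a = stack.pop(), stack.pop()
            stack.append(a + b if op == "ADD" else a * b)
    return stack.pop()


print(compile_infix("2 + 3 * 4") == compile_prefix("(+ 2 (* 3 4))"))  # True
print(run(compile_infix("2 + 3 * 4")))  # 14
```

Both front ends produce identical instruction lists, so the executor cannot tell which “language” the code came from. That is a small-scale analogue of how a C# class and a VB.NET class can interact inside the CLR.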

In modern .NET (open source and cross-platform since .NET Core), the runtime JIT-compiles code in tiers by default. It first uses a quick, lightly optimized pass, then recompiles methods that turn out to be hot with full optimization. When startup time or deployment size matters more, developers can instead compile ahead of time with options such as ReadyToRun or Native AOT.

With Java and .NET setting the standard, managed execution became a core philosophy in software design. It influenced everything from JavaScript engines to game engines and even AI runtimes.

5. JavaScript: The Most Optimized Interpreted Language

No language demonstrates the evolution of runtimes better than JavaScript. It was created in just ten days in 1995 for Netscape Navigator and was originally a lightweight, fully interpreted scripting language.

Today, engines like Google’s V8, Mozilla’s SpiderMonkey, and Apple’s JavaScriptCore have turned JavaScript into one of the most optimized languages ever built. They do not just interpret code. They analyze, compile, and re-optimize it in real time.

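When you run a JavaScript program in an engine such as V8, the process looks roughly like this. The engine parses the code into an abstract syntax tree, converts that tree into bytecode, and starts interpreting the bytecode immediately. While the program runs, the engine gathers profiling data: which functions are hot and which types of values flow through them. Hot functions are sent to an optimizing JIT compiler, which translates them into native machine code specialized for the types observed so far. Sometimes those assumptions stop holding, for example when a function that has only ever received numbers is suddenly passed a string. The engine then deoptimizes: it discards the specialized code, falls back to the bytecode, and may recompile the function later.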
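The speculate-then-deoptimize cycle is the least intuitive part of that pipeline. The sketch below imitates only its control flow, in Python, under stated assumptions. `SpeculativeAdd`, the warm-up threshold, and the “fast path” are hypothetical stand-ins for the type feedback and machine code a real engine such as V8 would use. The fast path here is not actually faster. The object records the argument types it sees and specializes once the call site looks monomorphic. It then guards that assumption on every call and deoptimizes when the guard fails.

```python
from collections import Counter
from typing import Optional

WARMUP_CALLS = 3  # hypothetical: calls observed before speculating


class SpeculativeAdd:
    """Models one optimizable '+' call site in a dynamic-language JIT."""

    def __init__(self) -> None:
        self.type_feedback: Counter = Counter()  # (type(a), type(b)) -> count
        self.specialized_for: Optional[type] = None  # type the fast path assumes
        self.fast_hits = 0
        self.deopt_count = 0

    def __call__(self, a, b):
        if self.specialized_for is not None:
            # Guard: the optimized code is valid only if its assumption holds.
            if type(a) is self.specialized_for and type(b) is self.specialized_for:
                return self.fast_path(a, b)
            self.deoptimize()
        return self.generic_path(a, b)

    def generic_path(self, a, b):
        """Baseline tier: record type feedback, then perform a fully dynamic '+'."""
        self.type_feedback[(type(a), type(b))] += 1
        warmed_up = sum(self.type_feedback.values()) >= WARMUP_CALLS
        if warmed_up and len(self.type_feedback) == 1:
            left, right = next(iter(self.type_feedback))
            if left is right:  # monomorphic: only one type pair ever seen
                self.specialized_for = left
        return a + b

    def fast_path(self, a, b):
        # Stand-in for machine code that skips type checks on its operands.
        self.fast_hits += 1
        return a + b

    def deoptimize(self) -> None:
        """Discard the specialized code and fall back to the baseline tier."""
        self.specialized_for = None
        self.deopt_count += 1


add = SpeculativeAdd()
for _ in range(4):
    add(1, 2)  # three warm-up calls, then one call on the fast path
print(add.specialized_for, add.fast_hits)  # <class 'int'> 1
print(add("a", "b"), add.deopt_count, add.specialized_for)  # ab 1 None
```

After the string call, the type feedback holds two different type pairs, so this call site never re-specializes. That loosely mirrors how engines treat call sites that turn out to be polymorphic.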

This dynamic process makes JavaScript remarkably fast for a language that began as a simple interpreter. In many practical applications, optimized JavaScript can approach the performance of compiled languages.

Each time you load a web app, your browser becomes a compiler, interpreter, and optimizer working together to deliver the smooth experience we now take for granted.

6. Python, PyPy, and the Rise of Meta Interpreters

Python started as a straightforward interpreted language that executed bytecode through CPython. As its popularity exploded, performance became a growing concern, leading to the creation of multiple alternative runtimes.
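CPython’s bytecode step is easy to observe with the standard-library `dis` module. Opcode names change between Python versions. For instance, 3.11 replaced `BINARY_MULTIPLY` and its siblings with a single `BINARY_OP`, so the exact listing you see depends on your interpreter.

```python
import dis


def area(width, height):
    return width * height


# CPython compiled the function body to bytecode when `def` executed;
# the raw instruction bytes are stored on the function's code object.
print(type(area.__code__.co_code))  # <class 'bytes'>

# dis.dis prints a human-readable listing of those instructions.
dis.dis(area)

# The same instructions are available programmatically.
opnames = [instr.opname for instr in dis.get_instructions(area)]
print(opnames)
```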

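PyPy introduced a breakthrough approach called meta-tracing JIT compilation. PyPy’s interpreter is written in RPython, a restricted subset of Python, and its JIT is generated from that interpreter rather than written by hand. At run time, it detects loops that execute frequently and records a trace of the interpreter’s own operations during one iteration of such a loop. It then compiles that trace into machine code.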
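PyPy’s machinery is too large to reproduce here, but the tracing idea at its core can be sketched. The toy below is a plain tracing JIT, not a meta-tracing one, for a hypothetical register-machine bytecode. `PROGRAM`, `HOT_LOOP`, and all function names are invented. The toy works in four steps:

- It counts backward jumps.
- It records the operations executed during one iteration of a hot loop.
- It turns that recording into straight-line Python source with a guard.
- It runs the generated function until the guard fails.

PyPy applies the same idea one level down, tracing its own interpreter rather than the user’s bytecode.

```python
HOT_LOOP = 2  # hypothetical: back-edge count that triggers tracing

# A tiny register-machine program computing total = n + (n - 1) + ... + 1.
PROGRAM = [
    ("JUMP_IF_ZERO", "n", 4),  # 0: loop header, exit when n == 0
    ("ADD", "total", "n"),     # 1: total += n
    ("DEC", "n"),              # 2: n -= 1
    ("JUMP", 0),               # 3: back edge to the header
    ("HALT",),                 # 4
]


def step(op, pc, regs):
    """Interpret one instruction and return the next program counter."""
    kind = op[0]
    if kind == "ADD":
        regs[op[1]] += regs[op[2]]
    elif kind == "DEC":
        regs[op[1]] -= 1
    elif kind == "JUMP_IF_ZERO" and regs[op[1]] == 0:
        return op[2]
    elif kind == "JUMP":
        return op[1]
    return pc + 1


def compile_trace(trace):
    """Turn one recorded loop iteration into straight-line Python source."""
    lines = ["def run_trace(regs):", "    while True:"]
    for op in trace:  # the trailing JUMP is implied by `while True`
        if op[0] == "JUMP_IF_ZERO":  # branch was not taken while tracing
            lines.append(f"        if regs[{op[1]!r}] == 0: return {op[2]}  # guard")
        elif op[0] == "ADD":
            lines.append(f"        regs[{op[1]!r}] += regs[{op[2]!r}]")
        elif op[0] == "DEC":
            lines.append(f"        regs[{op[1]!r}] -= 1")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["run_trace"]


def run(program, regs):
    """Interpret, trace hot loops, and return (registers, compiled loop headers)."""
    back_edges, traces = {}, {}
    recording, trace = None, []  # loop header being traced, ops recorded so far
    pc = 0
    while program[pc][0] != "HALT":
        if recording is None and pc in traces:
            pc = traces[pc](regs)  # run compiled code until a guard fails
            continue
        op = program[pc]
        if recording is not None:
            trace.append(op)  # record what the interpreter actually executes
        next_pc = step(op, pc, regs)
        if recording is not None and op[0] == "JUMP_IF_ZERO" and next_pc != pc + 1:
            recording, trace = None, []  # loop exited mid-trace: abandon it
        elif op[0] == "JUMP" and next_pc <= pc:  # backward jump = loop back edge
            if recording == next_pc:  # one full iteration recorded
                traces[next_pc] = compile_trace(trace)
                recording, trace = None, []
            else:
                back_edges[next_pc] = back_edges.get(next_pc, 0) + 1
                if back_edges[next_pc] == HOT_LOOP:
                    recording, trace = next_pc, []
        pc = next_pc
    return regs, list(traces)


print(run(PROGRAM, {"n": 10, "total": 0}))  # ({'n': 0, 'total': 55}, [0])
```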

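Projects such as Cython and Nuitka take the opposite route and compile Python ahead of time. Cython translates Python code, optionally annotated with C types, into C or C++ extension modules, while Nuitka compiles whole programs to C. The speedup varies by workload. It is typically largest when static type information lets the generated code avoid dynamic operations.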

Other implementations, like IronPython for .NET and Jython for the JVM, further prove that Python is no longer tied to a single runtime.

Depending on which implementation you choose, Python can be interpreted, JIT compiled, or fully compiled ahead of time. The same code adapts to different execution models, demonstrating that performance now depends more on the runtime than the language itself.

7. WebAssembly: The New Portable Runtime

If the JVM made code portable across operating systems, WebAssembly (WASM) makes it portable across the entire web.

WebAssembly is a low-level binary instruction format designed for efficient execution inside web browsers. Over time, it has grown beyond the web, becoming a universal runtime for secure and high-performance execution across platforms.

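Languages such as C, C++, Rust, and Go can compile to WASM. Even Python can run there, by compiling its interpreter, CPython, to WASM (the approach taken by Pyodide). The browser or host environment then executes the resulting module inside a sandbox. Because WASM is a compact, low-level format, engines can translate it into efficient machine code and reach near-native performance, while the sandbox keeps the module isolated from the host.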
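Much of that sandbox rests on WASM’s linear memory model. A module reads and writes only inside its own bounds-checked block of bytes, and an out-of-range access traps instead of touching host memory. A minimal Python model of that rule follows. Three details match the WebAssembly specification: the 64 KiB page size, the little-endian layout, and `memory.grow` returning the old size in pages. The `LinearMemory` and `Trap` names are hypothetical, and everything else is simplified; real engines often rely on guard pages rather than explicit checks.

```python
import struct

PAGE_SIZE = 64 * 1024  # WebAssembly memory grows in 64 KiB pages


class Trap(Exception):
    """Raised where a real engine would abort the module with a trap."""


class LinearMemory:
    def __init__(self, pages: int = 1) -> None:
        self.data = bytearray(pages * PAGE_SIZE)

    def _check(self, addr: int, size: int) -> None:
        # Every access is bounds-checked against the module's own memory.
        if addr < 0 or addr + size > len(self.data):
            raise Trap(f"out of bounds memory access at {addr}")

    def store_i32(self, addr: int, value: int) -> None:
        self._check(addr, 4)
        struct.pack_into("<i", self.data, addr, value)  # little-endian

    def load_i32(self, addr: int) -> int:
        self._check(addr, 4)
        return struct.unpack_from("<i", self.data, addr)[0]

    def grow(self, delta_pages: int) -> int:
        """Append zeroed pages and return the previous size in pages."""
        old_pages = len(self.data) // PAGE_SIZE
        self.data.extend(bytearray(delta_pages * PAGE_SIZE))
        return old_pages


mem = LinearMemory(pages=1)
mem.store_i32(0, 42)
print(mem.load_i32(0))  # 42
try:
    mem.load_i32(PAGE_SIZE)  # first byte past the end of the memory
except Trap as err:
    print("trapped:", err)
```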

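WASM’s design also gives it a performance edge over traditional interpreters. Modules are statically typed and validated before they run, so engines can compile them without the type guessing and deoptimization that dynamic-language JITs rely on. Its largely deterministic semantics make it attractive where reproducible execution matters. When combined with the WebAssembly System Interface (WASI), it extends to servers, IoT devices, and even AI workloads.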

In many ways, WebAssembly is the modern version of “Write Once, Run Anywhere,” not for one language but for all of them.

8. The Modern Spectrum

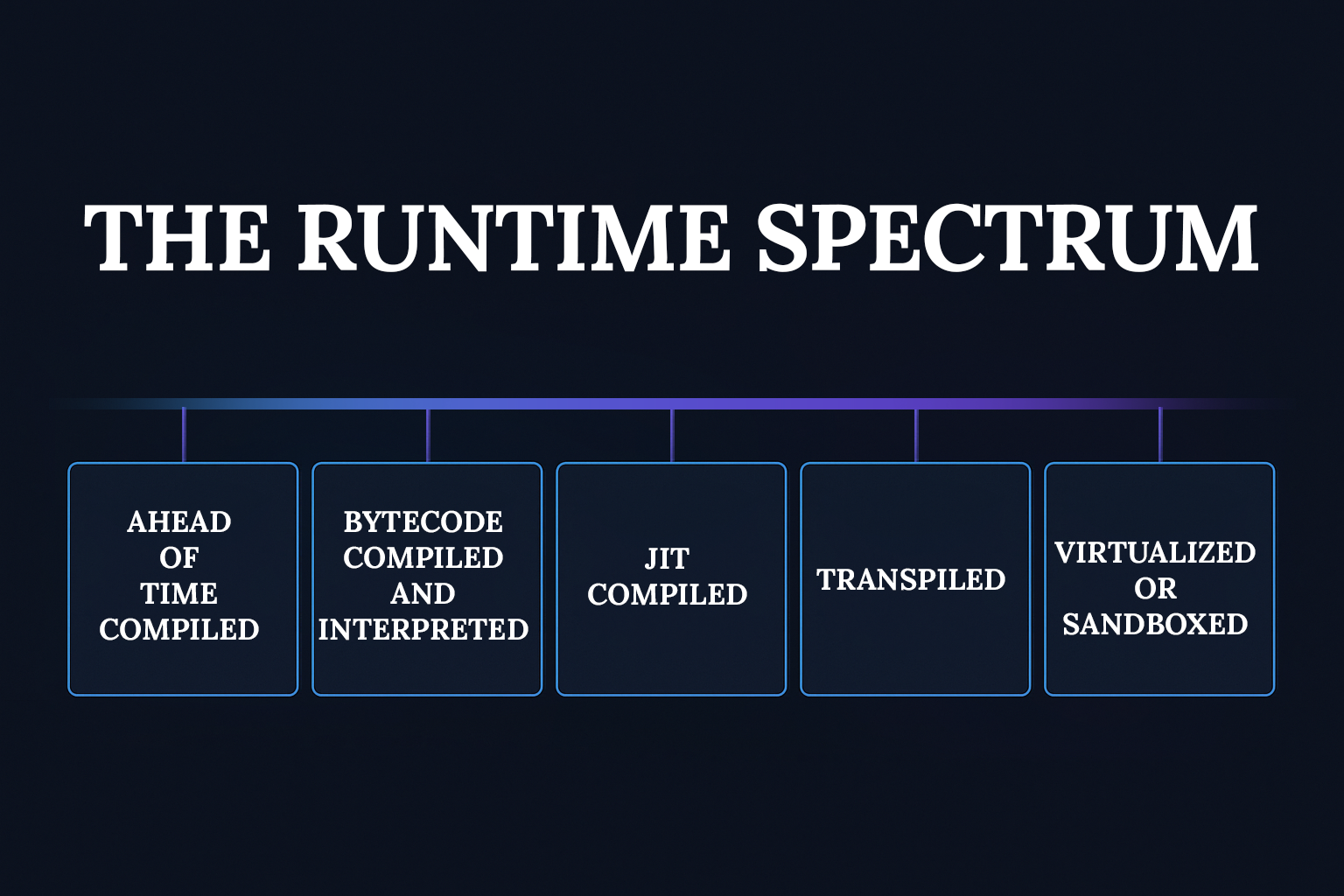

The world of programming no longer divides languages into compiled or interpreted categories. Instead, they exist along a wide spectrum of execution models.

| MODEL | EXAMPLES | HOW IT WORKS |
| --- | --- | --- |
| Ahead-of-time compiled | C, Rust | Compiled directly to machine code before running, for speed and control |
| Bytecode compiled and interpreted | Python (CPython) | Compiled to intermediate bytecode that an interpreter executes, for flexibility and portability |
| JIT compiled | Java (HotSpot), JavaScript (V8), C# (.NET), PyPy | Compiles hot paths to native code during runtime, for adaptive performance |
| Transpiled | TypeScript, JavaScript via Babel | Converts higher-level code into another language, for compatibility |
| Virtualized or sandboxed | WebAssembly | Executes safely in a controlled environment |

Each model offers a different balance of portability, speed, and safety, and modern runtimes often combine several of them. HotSpot and V8, for example, start by interpreting bytecode and switch to optimized native code for the functions that run most often.
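Of the models in the table, transpilation is the simplest to demonstrate. Much of what the TypeScript compiler does when emitting JavaScript is erase type annotations. The sketch below applies the same idea to Python source using the standard `ast` module (Python 3.9+ for `ast.unparse`). The `EraseAnnotations` and `transpile` names are hypothetical, and this is an analogy rather than how `tsc` or Babel are implemented.

```python
import ast


class EraseAnnotations(ast.NodeTransformer):
    """Source-to-source pass: drop type hints, keep runtime behavior."""

    def visit_FunctionDef(self, node):
        node.returns = None
        args = node.args
        for arg in args.posonlyargs + args.args + args.kwonlyargs:
            arg.annotation = None
        for extra in (args.vararg, args.kwarg):  # *args / **kwargs, if present
            if extra is not None:
                extra.annotation = None
        self.generic_visit(node)  # also rewrite annotations inside the body
        return node

    visit_AsyncFunctionDef = visit_FunctionDef

    def visit_AnnAssign(self, node):
        # "x: int = 5" becomes "x = 5"; a bare "x: int" becomes "pass".
        if node.value is None:
            return ast.copy_location(ast.Pass(), node)
        assign = ast.Assign(targets=[node.target], value=node.value)
        return ast.copy_location(assign, node)


def transpile(source: str) -> str:
    tree = EraseAnnotations().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


typed = '''
def scale(values: list[float], factor: float = 2.0) -> list[float]:
    result: list[float] = [v * factor for v in values]
    return result
'''
print(transpile(typed))
```

The output is ordinary untyped Python that behaves exactly like the input. This mirrors how a transpiler trades a richer source language for a widely supported target.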

9. Why This Matters

Understanding how runtimes work has become essential for developers. It influences how we write, structure, and optimize our code.

Knowing what happens behind the scenes helps you make better decisions about performance, portability, and security. You can decide when to precompile for speed, when to rely on runtime optimization, and when to isolate processes for safety.

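AI frameworks rely on runtimes that compile mathematical graphs on the fly: TensorFlow can trace Python functions into graphs with tf.function, and PyTorch can capture and compile models with torch.compile. Mobile frameworks balance performance and flexibility in a similar way. Flutter runs Dart on a JIT-enabled VM during development and ships ahead-of-time compiled code in release builds, while React Native runs JavaScript on an engine such as Hermes. Even blockchain smart contracts depend on deterministic runtimes, such as the Ethereum Virtual Machine (EVM) and WASM-based environments, for security.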
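Graph capture can be sketched with operator overloading. The idea is to call the Python function once on placeholder objects, record every arithmetic operation as a graph node, and later replay the graph without re-running the Python code. The `Node`, `lift`, and `trace` names are hypothetical. This toy is far simpler than what TensorFlow or PyTorch actually do; it only illustrates why a traced graph can be optimized and executed separately from the code that produced it.

```python
import operator


class Node:
    """A value in the traced graph: an input, a constant, or an operation."""

    def __init__(self, op, *args):
        self.op, self.args = op, args

    # Arithmetic on Nodes records an operation instead of computing a number.
    def __add__(self, other):
        return Node(operator.add, self, lift(other))

    def __mul__(self, other):
        return Node(operator.mul, self, lift(other))

    __radd__ = __add__  # safe here because + and * are commutative
    __rmul__ = __mul__


def lift(x):
    return x if isinstance(x, Node) else Node("const", x)


def trace(fn, num_inputs):
    """Run fn once on placeholder inputs and return a replayable graph function."""
    inputs = [Node("input", i) for i in range(num_inputs)]
    output = fn(*inputs)  # Python-level logic runs only here, at trace time

    # Linearize the graph in dependency order; shared nodes appear once.
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for arg in node.args:
            if isinstance(arg, Node):
                visit(arg)
        order.append(node)

    visit(output)

    def compiled(*values):
        results = {}
        for node in order:
            if node.op == "input":
                results[id(node)] = values[node.args[0]]
            elif node.op == "const":
                results[id(node)] = node.args[0]
            else:
                results[id(node)] = node.op(*(results[id(a)] for a in node.args))
        return results[id(output)]

    compiled.graph_size = len(order)
    return compiled


def f(x, w):
    h = x * w + 1
    return h * h  # h is used twice but recorded once


fast_f = trace(f, num_inputs=2)
print(fast_f(3, 2), f(3, 2))  # 49 49
```

Like real tracers, this one captures only the operations it sees during tracing. A comparison such as `x > 0` is not supported by these placeholder objects, and production frameworks likewise need special handling for control flow that depends on input values.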

In modern computing, the runtime defines how your code behaves. It determines how fast, how portable, and how reliable it will be.

10. Conclusion: The Runtime Is the New Compiler

The old question “Is it compiled or interpreted?” no longer applies. The better question is “How does its runtime execute and optimize the code?”

Every modern language implementation falls somewhere between the two extremes. Some compile early, others compile late, and some continuously optimize during execution.

From the JVM and CLR to V8 and WebAssembly, runtimes have evolved into living systems that act as compiler, interpreter, and optimizer all at once.

Your source code does more than run. The runtime adapts it, learns from how it behaves, and keeps improving it while it executes.

That is why this truly is the Age of Language Runtimes, where nearly everything is both compiled and interpreted.